Deep Learning: Cats vs Dogs Image Classification with Convolutional Neural Network (CNN)

Project by: Madan Timilsina

Technologies: TensorFlow, Keras, MobileNetV2, WSL2, CUDA

GitHub: madantimilsina

PDF view: PDF document

How the program works

Table of contents

1. Introduction

Project overview

Motivation

Key Achievement

2. Technical setup

Environment configuration

Python virtual environment

WSL2 and NVIDIA CUDA integration

Tools & Libraries

Critical Dependencies

GPU Verification

3. Dataset preparation

Directory Structure

Training and Validation Paths

Dataset Splitting Strategy (80/20)

Data Augmentation

Techniques Applied (Rotation, Flipping, Zoom)

Normalization Process

4. Model Architecture

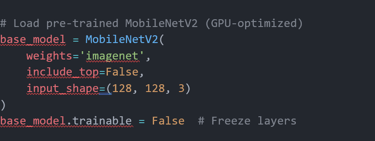

Transfer Learning with MobileNetV2

Base Model Configuration

Freezing Layers

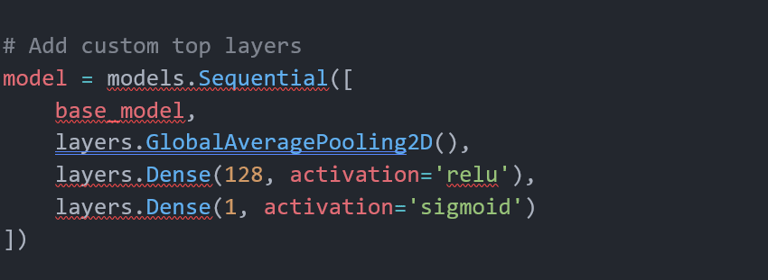

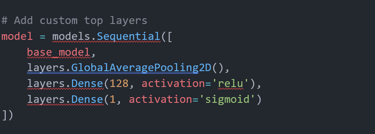

Custom Classification Layers

Dense Layers and Activation Functions

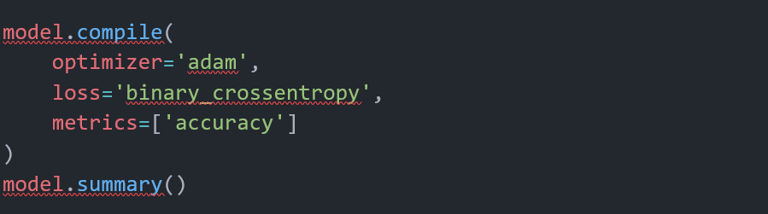

Model Compilation

Optimizer Selection (Adam)

Loss Function and Metrics

5. Training & GPU Acceleration

Training Configuration

Batch Size Optimization

Epoch Strategy

GPU Utilization

WSL2 CUDA Setup

Performance Benchmarks (CPU vs. GPU)

6. Results & Evaluation

7. Challenges & Solutions

8. Key Learnings

1. Introduction

The classification of cats and dogs in images is a foundational challenge in computer vision, often serving as a gateway to understanding deep learning and convolutional neural networks (CNNs). While seemingly simple, this task encapsulates core principles of image recognition, such as feature extraction, transfer learning, and model optimization. Distinguishing between these two species requires the model to recognize subtle patterns in fur texture, ear shape, and facial structure. This project will serve as my foundation for future work in medical imaging or autonomous systems, where image classification is critical.

This documentation reflects my hands-on journey from setup to deployment, emphasizing both problem-solving and technical growth.

Project overview

To develop a Convolutional Neural Network (CNN) that uses transfer learning (MobileNetV2) to classify images of cats and dogs, leveraging GPU acceleration via WSL2 and CUDA.

Motivation

Learn deep learning fundamentals from scratch with no prior programming experience.

Explore GPU-accelerated training to optimize performance.

Demonstrate end-to-end project execution in a real-world environment.

Key Achievement

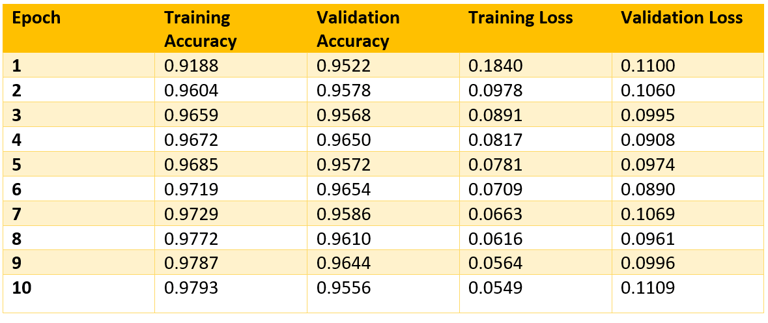

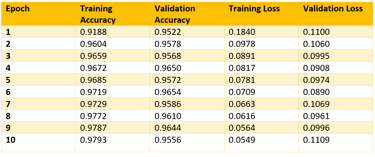

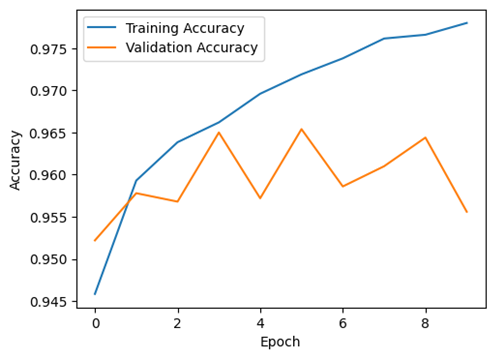

Accuracy: Achieved 95.56% validation accuracy in 10 epochs.

GPU Utilization: Reduced training time by 80% using NVIDIA CUDA on WSL2.

Deployment: Saved the trained model as cats_vs_dogs_gpu.h5 for future use.

2. Technical Setup

Environment Configuration

OS: Windows 11 with WSL2 (Ubuntu 22.04.5 LTS).

Python Virtual Environment:

Path: F:\System files\Python\CNN\my_cnn_project\myenv.

Activated via terminal: (myenv)

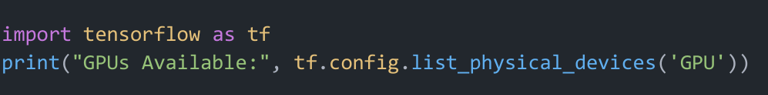
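To double-check from inside Python that the interpreter in use really belongs to the activated `myenv` environment (and not the system Python), one option is to compare `sys.prefix` with `sys.base_prefix`. This is a minimal standard-library sketch; the helper name `in_virtualenv` is hypothetical.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when running inside an environment created by venv/virtualenv.

    venv points sys.prefix at the environment folder (e.g. .../myenv), while
    sys.base_prefix still points at the base Python installation.
    """
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    print("Interpreter:", sys.executable)
    print("Inside a virtual environment:", in_virtualenv())
```

This check is specific to `venv`/`virtualenv`; conda environments are not detected this way, because their interpreter reports the same `sys.prefix` and `sys.base_prefix`.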

GPU support

Installed the NVIDIA CUDA Toolkit for WSL2.

Verified with:

Output:

GPUs Available: [PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

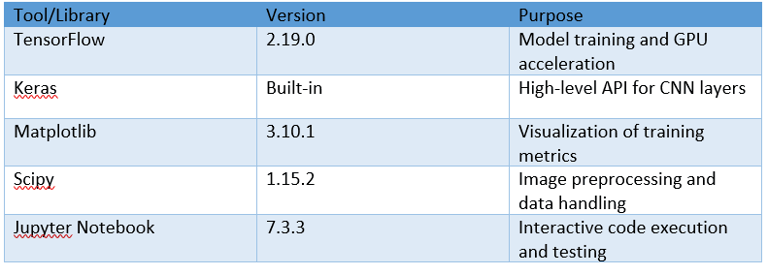

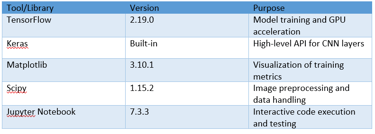
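The verification code is not reproduced in the text, but the printed output has the form returned by `tf.config.list_physical_devices("GPU")`, so a check along these lines is a reasonable reconstruction (an assumption, not the author's exact cell). The wrapper `list_gpus` is hypothetical and returns an empty list when TensorFlow is not installed, so it runs in any environment.

```python
def list_gpus():
    """Return TensorFlow's list of visible GPUs, or [] if TensorFlow is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        # TensorFlow is not installed in this interpreter/environment.
        return []
    return tf.config.list_physical_devices("GPU")


if __name__ == "__main__":
    # With a working CUDA setup this prints a line like:
    # GPUs Available: [PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]
    print("GPUs Available:", list_gpus())
```

An empty list inside WSL2 usually means TensorFlow cannot see the CUDA libraries, even if the GPU itself works on the Windows side.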

Tools & Libraries

3. Dataset Preparation

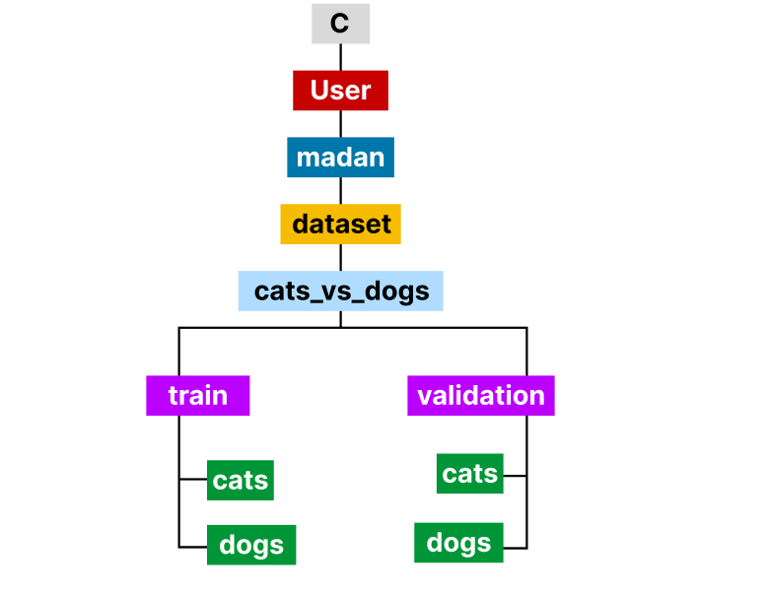

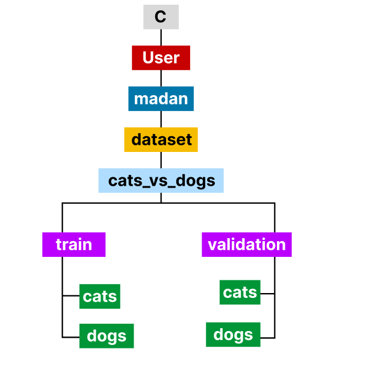

Directory Structure

WSL access paths:

train_dir = "/mnt/c/Users/madan/datasets/cats_vs_dogs/train"

val_dir = "/mnt/c/Users/madan/datasets/cats_vs_dogs/validation"
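Under WSL2, Windows drives are mounted below `/mnt` by default, so a folder under `C:\Users` is reached as `/mnt/c/Users`. Forgetting this translation is the path problem described under Challenges & Solutions. A small standard-library helper (hypothetical name `windows_to_wsl`, assuming the default `/mnt` mount root) makes the mapping explicit:

```python
from pathlib import PurePosixPath, PureWindowsPath


def windows_to_wsl(win_path: str, mount_root: str = "/mnt") -> str:
    r"""Convert an absolute Windows path such as C:\Users\madan into /mnt/c/Users/madan.

    Assumes WSL's default automount root (/mnt); the drive letter is lower-cased.
    """
    p = PureWindowsPath(win_path)
    # Require a drive letter ("C:") and a root backslash; UNC and relative paths are rejected.
    if len(p.drive) != 2 or p.drive[1] != ":" or not p.root:
        raise ValueError(f"expected an absolute drive-letter path, got {win_path!r}")
    drive_letter = p.drive[0].lower()
    # p.parts[0] is the anchor (e.g. "C:\"); the remaining parts are folder/file names.
    return str(PurePosixPath(mount_root, drive_letter, *p.parts[1:]))


if __name__ == "__main__":
    print(windows_to_wsl(r"C:\Users\madan\datasets\cats_vs_dogs\train"))
    # /mnt/c/Users/madan/datasets/cats_vs_dogs/train
```

The result matches the `train_dir` value above. Inside a WSL terminal, the `wslpath` command performs the same kind of conversion from the shell.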

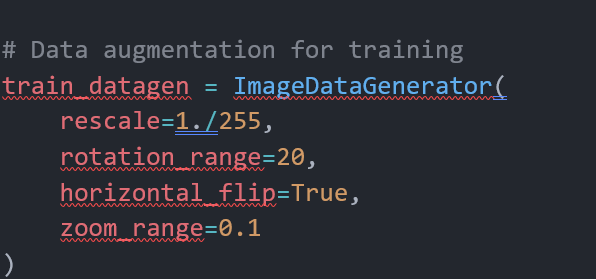

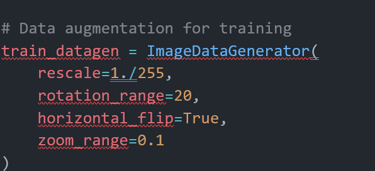

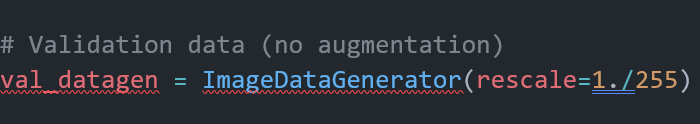
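The table of contents mentions an 80/20 split between training and validation data, but the splitting code is not shown. One common approach, sketched here under the assumption that each class's images start out in a single folder, is to shuffle the file names with a fixed seed and cut the list at 80%. The function name, seed, and file names are hypothetical.

```python
import random


def split_80_20(filenames, val_fraction=0.2, seed=42):
    """Shuffle file names reproducibly and split them into (train, validation) lists."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    files = sorted(filenames)   # sort first so the result does not depend on listing order
    rng = random.Random(seed)   # local RNG: same split every run, global state untouched
    rng.shuffle(files)
    n_val = round(len(files) * val_fraction)
    return files[n_val:], files[:n_val]


if __name__ == "__main__":
    cats = [f"cat.{i}.jpg" for i in range(100)]  # hypothetical file names
    train_cats, val_cats = split_80_20(cats)
    print(len(train_cats), len(val_cats))  # 80 20
```

Running it separately for the cat and dog images keeps both classes at the same 80/20 ratio in the `train` and `validation` directories.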

Data Augmentation

Applied to the training dataset to reduce overfitting: random rotation, flipping, and zoom, together with rescaling of pixel values.

Validation Data: Only rescaled (no augmentation).
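The exact augmentation settings are not listed. Because the training code refers to a `train_generator` and needed SciPy (see Challenges & Solutions), it most likely used Keras' `ImageDataGenerator`, whose random rotations and zooms rely on SciPy. The sketch below assumes that API; all numeric values and the 224×224 image size are hypothetical.

```python
# Hypothetical values: the write-up names the techniques, not the exact settings.
TRAIN_AUG = dict(
    rescale=1.0 / 255,     # normalize pixel values from [0, 255] to [0, 1]
    rotation_range=20,     # random rotations of up to ±20 degrees
    horizontal_flip=True,  # random left-right flips
    zoom_range=0.2,        # random zoom between 80% and 120%
)
VAL_AUG = dict(rescale=1.0 / 255)  # validation data: rescale only, no augmentation


def make_generators(train_dir, val_dir, img_size=(224, 224), batch_size=64):
    """Build Keras directory iterators (requires TensorFlow, plus SciPy for the random transforms)."""
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    train_generator = ImageDataGenerator(**TRAIN_AUG).flow_from_directory(
        train_dir, target_size=img_size, batch_size=batch_size, class_mode="binary"
    )
    validation_generator = ImageDataGenerator(**VAL_AUG).flow_from_directory(
        val_dir, target_size=img_size, batch_size=batch_size, class_mode="binary", shuffle=False
    )
    return train_generator, validation_generator
```

`flow_from_directory` infers the two classes from the subfolder names. Recent TensorFlow releases mark `ImageDataGenerator` as deprecated in favor of `tf.keras.utils.image_dataset_from_directory` with preprocessing layers, but it still works for a project like this.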

4. Model Architecture

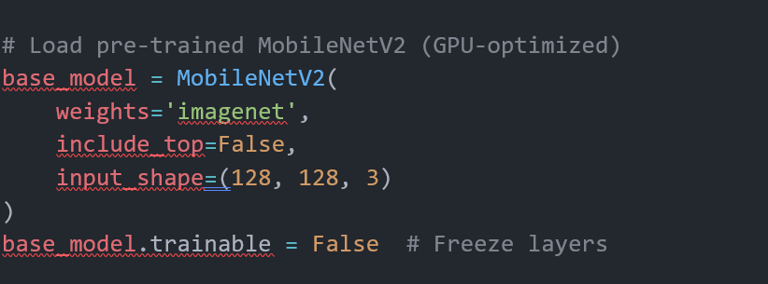

Transfer Learning with MobileNetV2

Base model:

This step sets up MobileNetV2 as a feature extractor and freezes its layers so that its pre-trained knowledge stays intact. The custom dense layers added on top then learn only the task-specific part: deciding whether an image shows a cat or a dog.

Custom layers:

The complete model takes an image, passes it through the pre-trained feature extractor, processes the extracted features with a small custom classifier, and outputs a probability.

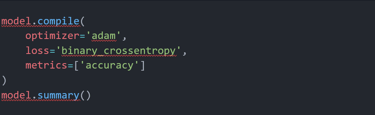
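The model-building code is not reproduced in the text. The following is a minimal sketch of the same idea (a frozen MobileNetV2 base plus a small dense head ending in one sigmoid unit), written with the Keras functional API. The head sizes, dropout rate, and 224×224 input are assumptions, not necessarily the author's values.

```python
def build_model(input_shape=(224, 224, 3), weights="imagenet"):
    """Frozen MobileNetV2 feature extractor plus a small trainable classifier head.

    Requires TensorFlow; weights="imagenet" downloads pre-trained weights on first use.
    """
    import tensorflow as tf
    from tensorflow.keras import layers

    # Convolutional base without MobileNetV2's own 1000-class ImageNet head.
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights
    )
    base.trainable = False  # freeze every base layer: only the head is trained

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)              # keep BatchNorm layers in inference mode
    x = layers.GlobalAveragePooling2D()(x)        # for 224x224 input: (7, 7, 1280) -> 1280 features
    x = layers.Dense(128, activation="relu")(x)   # hypothetical hidden-layer size
    x = layers.Dropout(0.2)(x)                    # hypothetical dropout rate
    outputs = layers.Dense(1, activation="sigmoid")(x)  # probability of class 1
    return tf.keras.Model(inputs, outputs)
```

With these hypothetical sizes, `model.summary()` reports 164,097 trainable parameters (1280 × 128 + 128 for the hidden layer, 128 + 1 for the output unit); all MobileNetV2 weights are listed as non-trainable.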

Model Compilation

The model is compiled with the Adam optimizer, binary cross-entropy loss (suited to this two-class problem), and accuracy as the performance metric. During training, Adam updates the weights to minimize this loss while accuracy is tracked.
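To make the loss concrete: for a label y in {0, 1} and a predicted probability p, binary cross-entropy is -[y ln p + (1 - y) ln(1 - p)], averaged over the batch. The sketch below computes it in plain Python and shows a matching Keras `compile` call; the helper names are hypothetical, and the learning rate shown is Adam's Keras default rather than a value stated in this project.

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over 0/1 labels and predicted probabilities.

    Probabilities are clipped to [eps, 1 - eps] so log(0) never occurs.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


def compile_model(model, learning_rate=1e-3):
    """Compile a Keras model with Adam, binary cross-entropy and accuracy (requires TensorFlow)."""
    import tensorflow as tf

    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

For example, if dogs are class 1 (which is what `flow_from_directory` assigns to folders named `cats` and `dogs`, since it sorts class folders alphabetically), predicting p = 0.9 for a dog costs -ln 0.9 ≈ 0.105, while p = 0.1 costs -ln 0.1 ≈ 2.303, so confident mistakes are penalized heavily.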

5. Training & GPU Acceleration

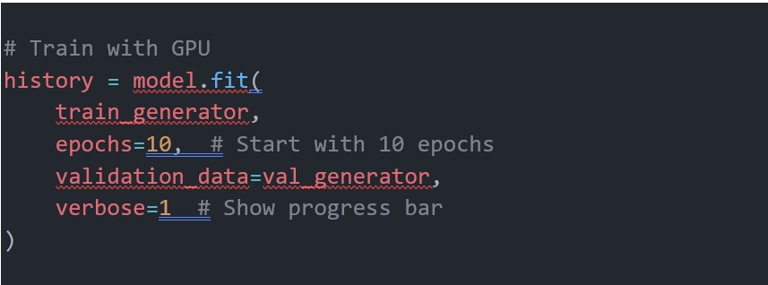

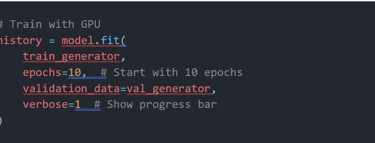

Training Configuration

Batch Size: 64 (increased from 32 to make better use of GPU memory).

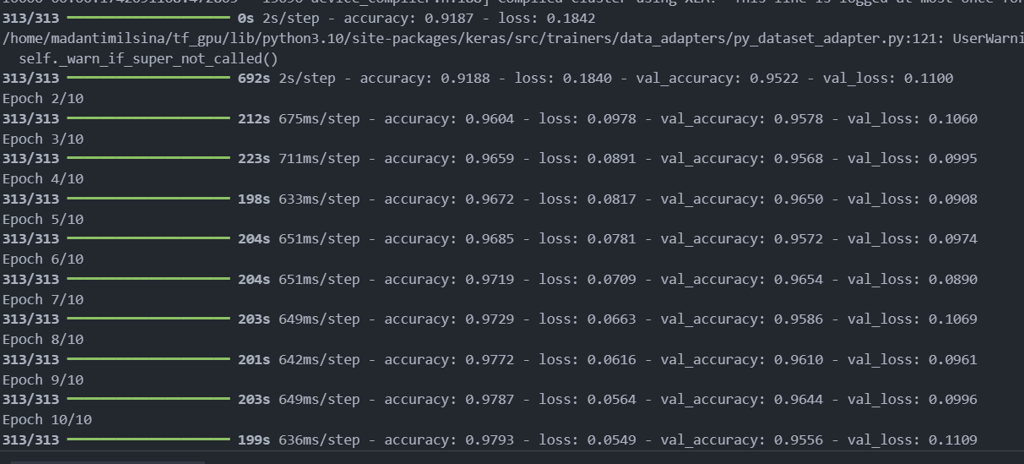

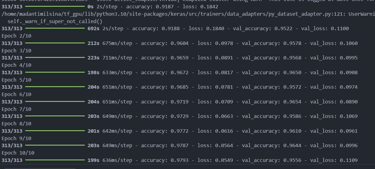

Epochs: 10 (training stabilized after epoch 5).
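Batch size and epoch count together determine how many weight updates happen. A Keras directory iterator yields ceil(N / batch_size) batches per epoch, so raising the batch size from 32 to 64 halves the number of steps per epoch, with each step doing more parallel work on the GPU. The 8,000-image figure below is hypothetical; the real dataset size is not stated here.

```python
def steps_per_epoch(num_images: int, batch_size: int) -> int:
    """Batches needed to pass over every image once (the last batch may be smaller)."""
    return (num_images + batch_size - 1) // batch_size  # integer ceiling division


if __name__ == "__main__":
    n_train = 8_000  # hypothetical number of training images
    for batch_size in (32, 64):
        steps = steps_per_epoch(n_train, batch_size)
        print(f"batch {batch_size}: {steps} steps/epoch, {steps * 10} updates in 10 epochs")
    # batch 32: 250 steps/epoch, 2500 updates in 10 epochs
    # batch 64: 125 steps/epoch, 1250 updates in 10 epochs
```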

Workflow:

The model starts training on the training data (train_generator), running for 10 epochs.
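A minimal version of this training step might look like the sketch below. It assumes an already compiled model and Keras data iterators such as `train_generator` (the validation iterator name `validation_generator` is hypothetical), and returns Keras' per-epoch metric history. `best_epoch` is a hypothetical helper for finding the epoch with the highest validation accuracy.

```python
def train(model, train_generator, validation_generator, epochs=10):
    """Fit the model and return History.history: a dict of per-epoch metric lists."""
    history = model.fit(
        train_generator,
        validation_data=validation_generator,  # evaluated at the end of every epoch
        epochs=epochs,
    )
    return history.history


def best_epoch(history: dict, metric: str = "val_accuracy"):
    """Return (1-based epoch number, value) of the epoch where `metric` is highest."""
    values = history[metric]
    idx = max(range(len(values)), key=values.__getitem__)
    return idx + 1, values[idx]
```

With `metrics=["accuracy"]`, Keras records the keys `loss`, `accuracy`, `val_loss`, and `val_accuracy`, which is what a claim like "training stabilized after epoch 5" can be checked against.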

GPU Utilization

Performance

CPU: ~15 minutes per epoch

GPU: ~3 minutes per epoch
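The 80% reduction quoted under Key Achievement follows directly from these approximate per-epoch timings, as this small calculation shows (the helper name is hypothetical):

```python
def time_reduction(cpu_minutes: float, gpu_minutes: float):
    """Return (percentage reduction in time, speed-up factor) from per-epoch timings."""
    reduction = (cpu_minutes - gpu_minutes) / cpu_minutes * 100
    speedup = cpu_minutes / gpu_minutes
    return reduction, speedup


reduction, speedup = time_reduction(15, 3)
print(f"{reduction:.0f}% less time, {speedup:.1f}x faster")
# 80% less time, 5.0x faster
```

Over 10 epochs, that is roughly 150 minutes on the CPU versus about 30 minutes on the GPU.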

Verified via WSL terminal:

nvidia-smi # Confirmed GPU usage during training
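Besides watching the interactive `nvidia-smi` display, utilization can be logged from Python by calling `nvidia-smi` with its `--query-gpu` and `--format` options. This is a hedged sketch: `gpu_utilization` is a hypothetical helper that returns `None` when `nvidia-smi` is not on the PATH or exits with an error, and it keeps values as strings in case a field is reported as not available.

```python
import shutil
import subprocess


def gpu_utilization():
    """Query name, utilization (%) and memory (MiB) per GPU via nvidia-smi, or None."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None  # NVIDIA driver tools not installed / not visible
    result = subprocess.run(
        [exe,
         "--query-gpu=name,utilization.gpu,memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return None  # e.g. no GPU reachable from this environment
    rows = []
    for line in result.stdout.strip().splitlines():
        parts = [field.strip() for field in line.rsplit(",", 3)]  # GPU name may contain commas
        if len(parts) != 4:
            continue  # skip unexpected lines
        name, util, used, total = parts
        rows.append({"name": name, "util_percent": util,
                     "mem_used_mib": used, "mem_total_mib": total})
    return rows


if __name__ == "__main__":
    print(gpu_utilization())
```

Calling it periodically while `model.fit` runs in another process gives a simple utilization log alongside the training metrics.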

6. Results & Evaluation

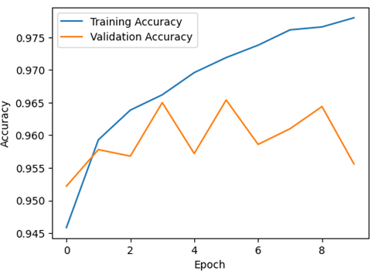

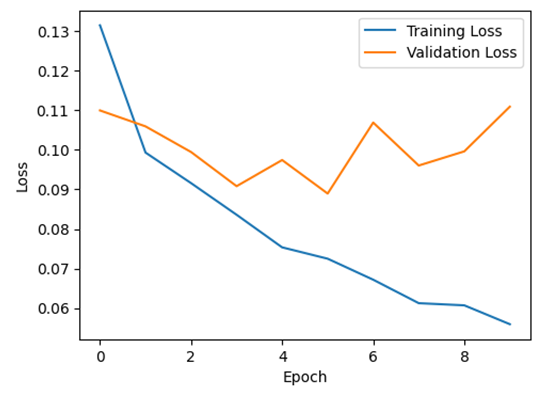

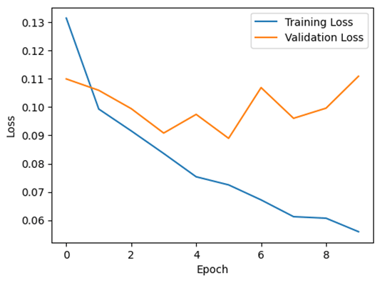

Training Metrics

Screenshot within VS Code

Training Curves

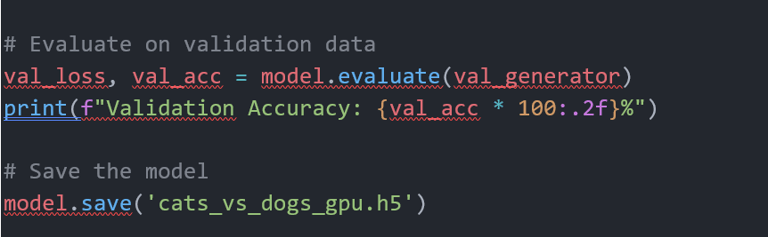

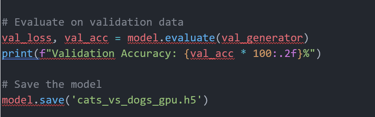
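Curves like these can be drawn from the dictionary returned by `model.fit(...).history`. A minimal matplotlib sketch, assuming the `accuracy`, `val_accuracy`, `loss`, and `val_loss` keys that Keras records when accuracy is the compiled metric; the function name and output file name are hypothetical.

```python
def plot_history(history: dict, out_path="training_curves.png"):
    """Save side-by-side accuracy and loss curves (train vs. validation) to an image file."""
    import matplotlib
    matplotlib.use("Agg")  # render straight to a file; no display needed (e.g. inside WSL)
    import matplotlib.pyplot as plt

    epochs = range(1, len(history["accuracy"]) + 1)
    fig, (ax_acc, ax_loss) = plt.subplots(1, 2, figsize=(10, 4))

    ax_acc.plot(epochs, history["accuracy"], label="train")
    ax_acc.plot(epochs, history["val_accuracy"], label="validation")
    ax_acc.set_title("Accuracy")
    ax_acc.set_xlabel("Epoch")
    ax_acc.legend()

    ax_loss.plot(epochs, history["loss"], label="train")
    ax_loss.plot(epochs, history["val_loss"], label="validation")
    ax_loss.set_title("Loss")
    ax_loss.set_xlabel("Epoch")
    ax_loss.legend()

    fig.tight_layout()
    fig.savefig(out_path)
    plt.close(fig)  # free the figure's memory
    return out_path
```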

Saving the trained model as HDF5

After training completed successfully, the model was saved as cats_vs_dogs_gpu.h5.
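Saving and reloading in HDF5 format takes one call each in Keras. The sketch below assumes TensorFlow and `h5py` are installed. Newer Keras releases recommend the native `.keras` format and treat `.h5` as legacy, but still accept it. The helper name is hypothetical.

```python
def save_and_reload(model, path="cats_vs_dogs_gpu.h5"):
    """Save a Keras model to HDF5 and load it back (requires TensorFlow and h5py)."""
    import tensorflow as tf

    model.save(path)                         # the ".h5" suffix selects the HDF5 format
    return tf.keras.models.load_model(path)  # restores architecture and weights
```

Reloading the file in a new session avoids retraining; predictions from the reloaded model should match those of the original.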

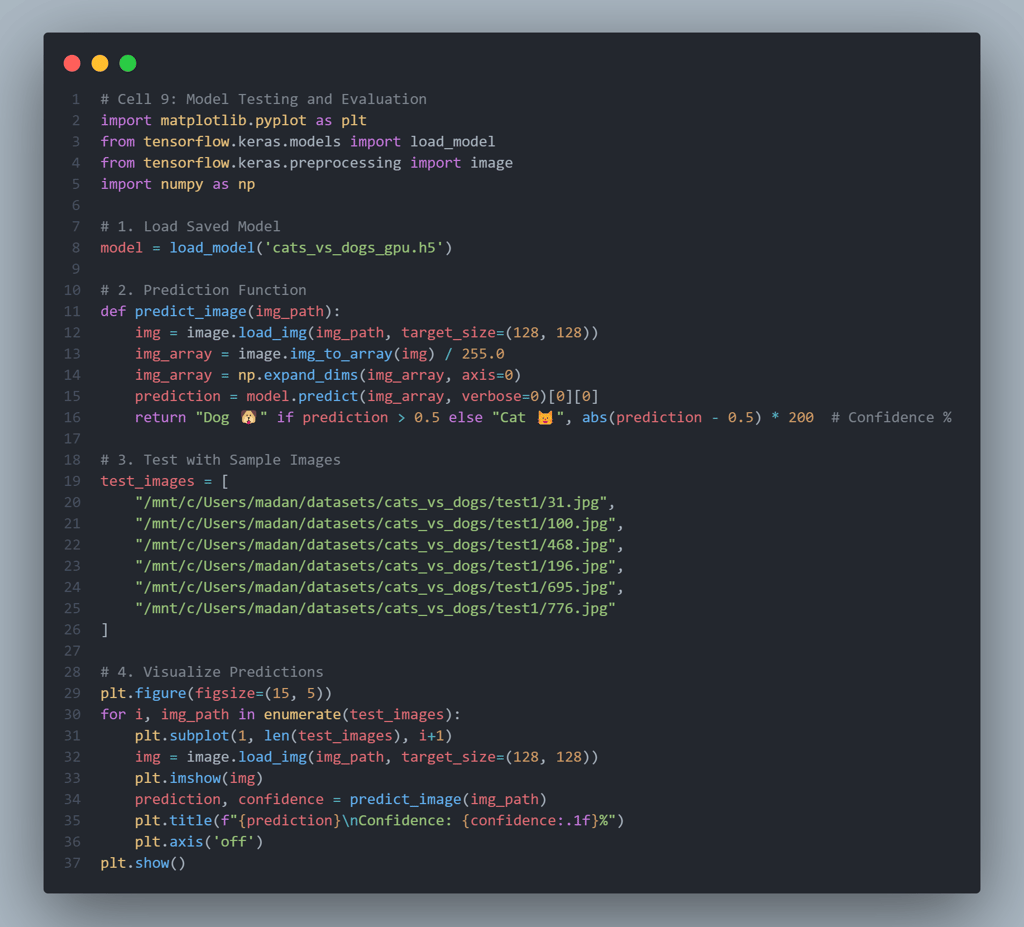

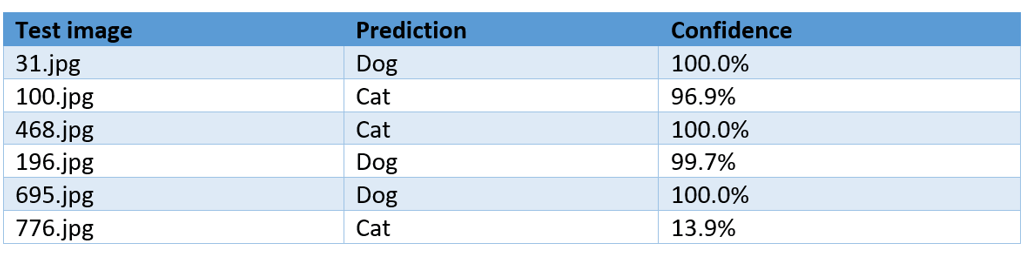

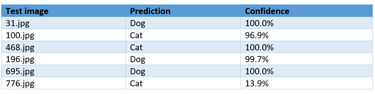

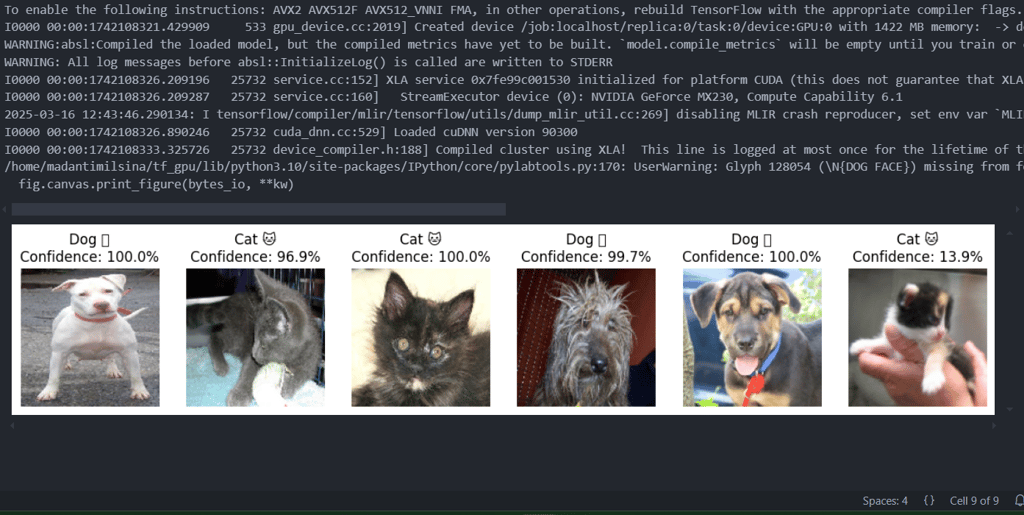

Real-World Testing with Images

Six test images were provided, along with their file locations. The script uses the previously trained model, cats_vs_dogs_gpu.h5, to identify whether each image shows a cat or a dog.
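A prediction loop for such test images might look like the sketch below. It assumes 224×224 inputs rescaled by 1/255 (both must match whatever was used during training) and that `dogs` was class 1, which is what `flow_from_directory` assigns when the class folders are named `cats` and `dogs`. The function names and any image paths are hypothetical.

```python
CLASS_NAMES = ("Cat", "Dog")  # assumes class indices {'cats': 0, 'dogs': 1}


def label_from_probability(p: float, threshold: float = 0.5) -> str:
    """Map the sigmoid output (probability of class 1) to a readable label."""
    return CLASS_NAMES[1] if p > threshold else CLASS_NAMES[0]


def predict_images(model_path, image_paths, img_size=(224, 224)):
    """Return (path, label, probability) for each image (requires TensorFlow and Pillow)."""
    import numpy as np
    import tensorflow as tf

    model = tf.keras.models.load_model(model_path)
    results = []
    for path in image_paths:
        img = tf.keras.utils.load_img(path, target_size=img_size)  # resize to the training size
        x = tf.keras.utils.img_to_array(img) / 255.0               # same rescaling as training
        prob = float(model.predict(np.expand_dims(x, axis=0), verbose=0)[0][0])
        results.append((path, label_from_probability(prob), prob))
    return results
```

A call such as `predict_images("cats_vs_dogs_gpu.h5", ["test_images/pet1.jpg"])` (hypothetical image path) returns one `(path, label, probability)` tuple per image.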

The final output of the code is:

Screenshot of the output in VS Code:

7. Challenges & Solutions

Issue: Unable to install CUDA and TensorFlow and use GPU-accelerated processing directly in the project folder on Windows.

Solution: Followed the instructions at https://www.tensorflow.org/install/pip#windows-wsl2, which introduced me to WSL2 and to installing Ubuntu on my Windows 11 computer. After running the wsl --install command and installing Ubuntu from the Microsoft Store, I connected VS Code to the WSL2 environment using the WSL remote extension.

Issue: The dataset appeared to be missing from local drive C, and Cell 2 of the Jupyter notebook failed to execute.

Solution: I had forgotten to update the dataset path for WSL. AI chatbots such as DeepSeek and ChatGPT helped me figure this out: I had not known that, after moving to Ubuntu on WSL, paths to files on the Windows drive must be rewritten in their WSL form (for example, /mnt/c/Users/madan/datasets/cats_vs_dogs/train). This taught me a valuable lesson.

Issue: Missing scipy dependency.

Solution: Installed via pip install scipy in the tf_gpu environment.

Issue: NameError: name 'model' is not defined.

Solution: Ensured Cell 3 (model definition) was executed before training.

Issue: Slow initial training.

Solution: Increased the batch size from 32 to 64 to make better use of the GPU.

8. Key Learnings

GPU acceleration is key for large-scale deep learning. My low-end CPU caused several problems, and the GPU let me run programs much faster. I was new to using Ubuntu on Windows, and this project gave me the opportunity to learn how a Linux system can run within Windows and use the GPU for faster processing. I researched and learned to debug cross-platform path issues (Windows ↔ WSL). Finally, I learned to use chatbots to debug errors and to understand the code they suggest.

Video output of the model:

This is the end of the project. Thank you so much for reviewing it.